The foundations of a data-driven business

What every decision-maker should know about data stacks

In many respects, data analysis is like cooking:

- The better the ingredients or “data”, the better the outcome.

- Good tools or “data infrastructure” make the process easier.

- Creativity and craftsmanship — “the human factor” — give a decisive advantage.

Using a bad knife quickly becomes an obvious issue when you’re cooking.

Using inadequate tools when you’re analysing data may not be as obvious.

Many companies spend a lot of time hiring the right people and tracking every metric they can.

However, what they frequently lack is a proper data infrastructure. All too often, this gap leads to poor decision-making.

Many decision-makers don’t know what it takes to set up a good stack. That is understandable, given how quickly the field is developing.

If you don’t know where to start, this blog post covers the fundamental components of a modern data stack.

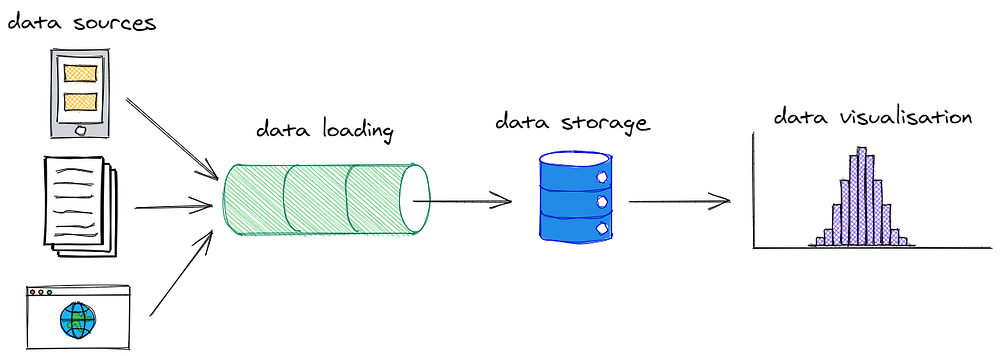

First, we outline the key considerations for decision-makers. Then we cover the components that make up a data stack: data loading, storage, transformation and visualisation, as well as cloud service providers. Finally, we summarise the key takeaways.

What to consider as a decision-maker

Every company should set up an infrastructure with a central database that contains data ready for analysis and reporting.

This ensures there is a single source of truth for your data.

OK, but where to begin?

There is no one-size-fits-all solution. Your company’s use case(s) will ultimately define the data infrastructure (a.k.a. stack).

And the better the use case is defined, the better the outcome.

You will achieve a better BI infrastructure when you understand how data insights add value to your company.

Then there are two more things to consider:

- Automation. Your system should run with as little human intervention as possible.

- Avoid vendor dependence. The last thing you want is vendor lock-in.

Lock-in becomes more problematic the larger your company grows, especially if you pay per user.

And what if I want to do data science? Or real-time data analysis?

Both are possible. But only start on either once you have thought about:

- Justification. Real-time data costs 5 to 10 times as much as data that is 60 minutes old. Does your use case justify that?

- Requirements. Only apply these technologies once a proper data infrastructure is in place. Many data science projects have no meaningful impact because they are built on poor-quality data.
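To put the justification question into numbers: the 5x to 10x rule of thumb above gives a rough cost range for the real-time equivalent of an hourly pipeline. The sketch below simply applies that multiplier; the 2,000 EUR/month baseline is a hypothetical figure, not a benchmark.

```python
def realtime_cost_range(
    hourly_batch_cost: float,
    low_multiplier: float = 5.0,
    high_multiplier: float = 10.0,
) -> tuple[float, float]:
    """Estimate the (low, high) cost of real-time data.

    Applies the rule of thumb that real-time data costs 5 to 10 times
    as much as data refreshed every 60 minutes.
    """
    if hourly_batch_cost < 0:
        raise ValueError("cost must be non-negative")
    return (hourly_batch_cost * low_multiplier,
            hourly_batch_cost * high_multiplier)


if __name__ == "__main__":
    baseline = 2_000  # hypothetical monthly cost of an hourly pipeline, in EUR
    low, high = realtime_cost_range(baseline)
    print(f"Real-time estimate: {low:,.0f} to {high:,.0f} EUR/month")
    print(f"Extra spend: {low - baseline:,.0f} to {high - baseline:,.0f} EUR/month")
```

The real question is then whether acting on fresher data is worth that difference for your use case.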

Data Loading: a classic make vs. buy decision

Depending on your company’s use case, there are two options for data loading:

- Make by using a scheduler and writing code to connect to the sources. Here at Gemma Analytics, we prefer Airflow as a scheduler to execute code written in Python.

- Buy the service of a SaaS provider such as Stitch or Fivetran.
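What “make” can look like in practice: the minimal sketch below assumes a hypothetical orders source and uses an in-memory SQLite table as a stand-in for the warehouse. It loads only the records changed since the last run (a high-water mark on `updated_at`) and overwrites changed rows, so reruns never create duplicates. In Airflow, a function like `load_orders` would typically run as a scheduled task, for example via a `PythonOperator`; the scheduler is left out here so the sketch runs with the Python standard library alone.

```python
import sqlite3


def extract_orders(source: list[dict], since: str) -> list[dict]:
    """Hypothetical connector: return source records changed after `since`.

    In a real pipeline this would call an API or query the source system.
    ISO-8601 timestamps in a fixed format compare correctly as strings.
    """
    return [row for row in source if row["updated_at"] > since]


def load_orders(conn: sqlite3.Connection, source: list[dict]) -> int:
    """Incrementally load new or changed orders; return the rows extracted."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS raw_orders ("
        "id INTEGER PRIMARY KEY, amount REAL, updated_at TEXT)"
    )
    # High-water mark: the most recent change already in the warehouse.
    (watermark,) = conn.execute(
        "SELECT COALESCE(MAX(updated_at), '') FROM raw_orders"
    ).fetchone()
    rows = extract_orders(source, since=watermark)
    # INSERT OR REPLACE overwrites rows with the same id, so a rerun or an
    # updated record never produces duplicates.
    conn.executemany(
        "INSERT OR REPLACE INTO raw_orders (id, amount, updated_at) "
        "VALUES (:id, :amount, :updated_at)",
        rows,
    )
    conn.commit()
    return len(rows)


if __name__ == "__main__":
    warehouse = sqlite3.connect(":memory:")
    orders = [
        {"id": 1, "amount": 40.0, "updated_at": "2024-01-01T09:00:00"},
        {"id": 2, "amount": 15.5, "updated_at": "2024-01-01T10:30:00"},
    ]
    print(load_orders(warehouse, orders))  # first run: both orders
    orders[0] = {"id": 1, "amount": 42.0, "updated_at": "2024-01-01T11:00:00"}
    print(load_orders(warehouse, orders))  # second run: only the changed order
```

A watermark like this assumes the source sets `updated_at` reliably; sources that cannot provide such a field usually need periodic full reloads instead.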

To decide which option suits your needs, you should ask yourself the following questions:

- Do/Will I have large amounts of data?

- Do/Will I need to store private data, like medical or religious data?

- Do/Will I have unconventional data sources, such as plentymarkets?

Is your answer “yes” to any of these questions? Then you should strongly consider building your own data loading, with a scheduler and custom code.

Should I hire a data engineer?

There are two factors at play here:

- Speed. Hiring a data engineer is hard. With more job posts than data engineers on the market, it will test your patience.

- Cost. A senior data engineer will cost you between €80k and €110k per year.

Let’s assume you want to set up a data infrastructure from scratch. The data engineering workload is only high in the early stages, so from a cost perspective it makes sense to outsource this work.

It takes 52 days on average to hire a data engineer, according to the 2021 DICE Tech Salary report.

One alternative is Data Engineering as a Service. Within a week, you can have data flowing into a data warehouse; within two weeks, your first dashboard can be ready. All of this for a fraction of the cost of hiring a data engineer.

Data Storage: an ever-changing landscape

When it comes to choosing the right data storage tool for your business, the expected size of your database is a major indicator.

Regular databases

We recommend using a regular SQL database, particularly PostgreSQL, for small databases in which no table exceeds 10 million records.

MPP databases

If your company requirements exceed the capability of a single PostgreSQL node, we recommend using a Massively Parallel Processing (MPP) database.

Snowflake or BigQuery are the go-to options here.

AWS Redshift was the first mover in the market. However, it requires significantly more DevOps effort and regular manual fine-tuning, so we no longer recommend it as highly.

Data Lakes

For vast amounts of data, you should consider a Data Lake. By vast amounts, we mean at least multiple petabytes of data.

If you don’t know what a Data Lake is, don’t worry. Luminousmen wrote a clear article on the difference between a Data Lake and a Data Warehouse.
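The sizing rules in this section can be condensed into a small decision helper. It is only a rough sketch: the 10M-row limit serves as a simple proxy for “exceeds a single PostgreSQL node”, reading “multiple petabytes” as 2 PB or more is our own assumption, and a real choice depends on more than size alone.

```python
# Rules of thumb from the text. The 10M-row limit is a simple proxy for
# "exceeds a single PostgreSQL node"; reading "multiple petabytes" as
# 2 PB or more is an assumption made for illustration.
POSTGRES_MAX_ROWS_PER_TABLE = 10_000_000
DATA_LAKE_MIN_BYTES = 2 * 10**15  # 2 PB (decimal units)


def recommend_storage(largest_table_rows: int, total_bytes: int) -> str:
    """Suggest a storage tier from the expected size of the data."""
    if total_bytes >= DATA_LAKE_MIN_BYTES:
        return "Data Lake"
    if largest_table_rows > POSTGRES_MAX_ROWS_PER_TABLE:
        return "MPP database (e.g. Snowflake or BigQuery)"
    return "PostgreSQL"


if __name__ == "__main__":
    print(recommend_storage(largest_table_rows=2_000_000, total_bytes=5 * 10**9))
    print(recommend_storage(largest_table_rows=250_000_000, total_bytes=8 * 10**11))
```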

Data Transformation: dbt = SQL for pros

We ❤ dbt for Data Transformation!

What is dbt?

In a nutshell: dbt is an open-source tool that helps analysts and analytics engineers build solid data models.

Why do we love dbt?

Simply put, it saves analytics engineers hours of work. Labour accounts for 70% to 85% of the cost of setting up a data infrastructure, and in the long run dbt will save hundreds of hours of it.

How does it reduce time exactly?

- It allows for software engineering best practices, such as data testing and version control.

- It automates CRUD procedures by understanding the relationships between data models.

- It allows for automated lineage, saving hours of checking tables.

- Templating allows you to automate certain tasks yourself and keep your code DRY (Don’t Repeat Yourself).
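The lineage and CRUD points above rest on one idea: dbt models reference each other with `{{ ref('model_name') }}`, so the dependency graph can be read straight from the SQL. The simplified sketch below is not dbt’s actual parser or compiler; it assumes a hypothetical four-model project, extracts the `ref()` calls, derives a valid build order with Python’s standard-library `graphlib` (3.9+) and lists every model affected by a change.

```python
import re
from graphlib import TopologicalSorter

# Matches dbt-style references such as {{ ref('stg_orders') }}.
REF_PATTERN = re.compile(r"\{\{\s*ref\(\s*['\"](\w+)['\"]\s*\)\s*\}\}")

# Hypothetical project: model name -> model SQL.
MODELS = {
    "stg_orders": "select * from raw.orders",
    "stg_customers": "select * from raw.customers",
    "customer_orders": (
        "select c.id, count(o.id) as order_count "
        "from {{ ref('stg_customers') }} as c "
        "left join {{ ref('stg_orders') }} as o on o.customer_id = c.id "
        "group by c.id"
    ),
    "customer_ltv": "select * from {{ ref('customer_orders') }}",
}


def lineage(models: dict[str, str]) -> dict[str, set[str]]:
    """Map each model to its parents, read from its ref() calls."""
    return {name: set(REF_PATTERN.findall(sql)) for name, sql in models.items()}


def build_order(models: dict[str, str]) -> list[str]:
    """Order models so that every parent is built before its children."""
    return list(TopologicalSorter(lineage(models)).static_order())


def downstream(models: dict[str, str], changed: str) -> set[str]:
    """Return every model that directly or indirectly depends on `changed`."""
    parents = lineage(models)
    affected: set[str] = set()
    to_visit = [changed]
    while to_visit:
        current = to_visit.pop()
        for name, deps in parents.items():
            if current in deps and name not in affected:
                affected.add(name)
                to_visit.append(name)
    return affected


if __name__ == "__main__":
    print(build_order(MODELS))
    print(downstream(MODELS, "stg_orders"))
```

Because the graph is derived rather than maintained by hand, a circular reference is caught automatically: `static_order()` raises `graphlib.CycleError`.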

Data Visualisation: where to start?

There are so many visualisation tools out there. It would be a bad strategy to just choose one that looks good.

Instead, you need to consider these factors:

- Cost

- Complexity and size of data tables

- Degree of customisation for the end-users

We compared three archetypes of visualisation tools: Metabase, Tableau and Looker.

Metabase

Metabase is great for several reasons. The best thing about Metabase: it’s open-source and free if you decide to host it yourself.

We recommend Metabase if your employees are knowledgeable about the data. It is also easy to set up and highly customisable by the end-user. Its major downside is its simplicity: it lacks some of the more sophisticated features.

Similar tools are Google Data Studio, Apache Superset and Redash.

Tableau

Tableau is suitable for companies with a strict division between the creator and user of dashboards.

Tableau offers many advanced features but is less intuitive to use. Therefore, reports are generally created in a central BI department, which can be powerful if well-defined.

We do not recommend Tableau to smaller organisations: Tableau’s prices are 3 to 5 times higher than those of open-source solutions. We do recommend Tableau to companies that have already outgrown the open-source solutions, or will outgrow them within the next six months.

Similar tools are Microsoft Power BI, Sisense and Qlik.

Looker

Looker is about as good as it gets. First of all, it’s entirely web-based. Secondly, it is well integrated with external tools.

But what makes Looker truly unique is LookML. This is their version-controlled configuration language. It allows for the creation of new metrics and dimensions inside Looker.

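LookML is what lets Looker users easily explore, drill through and manipulate data. Because metrics are defined centrally, it also avoids a common pitfall of self-service: different users calculating the same metric in different ways.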
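This is not LookML, and it is not how Looker is implemented. The hypothetical Python sketch below only illustrates the idea behind a semantic layer like the one LookML provides: dimensions and measures are defined once, in version-controlled code, and every query is generated from those definitions. Anyone who asks for revenue by country gets the same SQL instead of writing their own. The table and field names are made up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Field:
    name: str  # name users pick in the UI
    sql: str   # SQL expression behind it


# Hypothetical semantic model of an `orders` table, defined once and kept
# under version control.
TABLE = "orders"
DIMENSIONS = {f.name: f for f in [
    Field("country", "customer_country"),
    Field("status", "order_status"),
]}
MEASURES = {f.name: f for f in [
    Field("revenue", "SUM(amount)"),
    Field("order_count", "COUNT(*)"),
]}


def build_query(dimensions: list[str], measures: list[str]) -> str:
    """Generate SQL from named fields; unknown names raise KeyError."""
    dims = [DIMENSIONS[name] for name in dimensions]
    meas = [MEASURES[name] for name in measures]
    columns = [f"{f.sql} AS {f.name}" for f in dims + meas]
    query = f"SELECT {', '.join(columns)} FROM {TABLE}"
    if dims:
        query += " GROUP BY " + ", ".join(f.sql for f in dims)
    return query


if __name__ == "__main__":
    print(build_query(["country"], ["revenue"]))
```

Changing the definition of `revenue` in one place changes it for every report, which is exactly what ad-hoc self-service queries cannot guarantee.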

Unfortunately, this superiority comes at a price. Even for small organisations, it will cost you thousands of euros per month.

We recommend Looker to large organisations that require a high degree of self-service analytics and have a large number of data tables. Your organisation needs to be able and willing to maintain LookML.

Cloud Service Provider: keep it simple

Finally, you may need a cloud service provider (CSP) if you self-host any of the tools discussed above.

The main players here are AWS, Google Cloud and Azure. We do not recommend any of these providers over the others. If your company already uses a CSP, we recommend using the same for the data stack.

If you want to go down the rabbit hole: here is an excellent article that could help you to decide between AWS and Google Cloud.

You can also work on-premises or with a hybrid setup. We only recommend this if your use case justifies it, for example data with specific security requirements such as personal health data.

Final thoughts

Becoming data-driven is much more than just connecting the dots. It is a culture that needs to evolve. Connecting these dots, however, is the first crucial step towards data literacy.

If you want to achieve data literacy for your organisation, remember:

- Connecting data sources enables data-driven decision making. First, you need to move all relevant data into a data warehouse. From there on, you can create data models and visualisations.

- Your company profile and use cases determine the data stack.

- Think twice before setting up a data infrastructure in-house. Sometimes it makes sense to internalise the analytics function from day one. Other times, it helps to first talk to people who have been there and done that. They can help you understand your needs and the right data stack.

This was a small glimpse into data stacks for analytics. Any comments on the article? Or interested in Analytics as a Service? Get in touch with us.